Successful software projects please customers, streamline processes, or otherwise add value to your business. But how do you ensure that your software project will result in the improvements you are expecting? Will users experience better performance? Will the productivity across all tasks improve as you hoped? Will users be happy with your changes and return to your product again and again as you envisioned?

You don’t find answers to these questions with a standard QA testing plan. Standard QA will ensure that your product works. Usability testing will ensure that your product accomplishes your business objectives. Well-planned usability testing will shed a bright light on everything you truly care about: workflow metrics, user satisfaction, and strength of design.

How do you know when to start usability testing? Which usability tests are right for your product or website? Let’s examine the six types of usability testing you can use to improve your software.

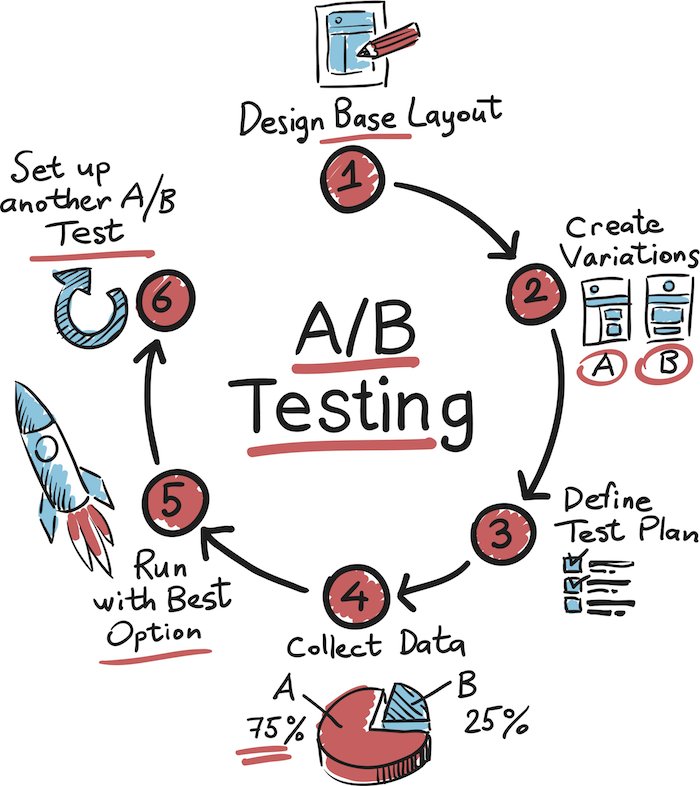

A/B testing

A/B testing generally applies to websites or landing pages. Two separate designs (A and B) are shown to different users over the same period of time, and data is collected on how each performs. The goal is generally lead generation or the conversion of a sale on a product. If the analytics show that design A or B converted users at a higher rate, it is declared the winner, the other design is retired, and we move on to additional split tests, always trying to improve conversion rates. Many third-party solutions will help run this type of usability testing. In fact, it is hard to run these tests without a third-party tool such as Optimizely.

How specific should a good A/B test be? Change one element at a time. Understanding why one design performs better than the other demands specificity. Clearly define the goal of the test, the scenario for the user, the problem the user may encounter, and the two separate solutions (A and B). A checklist:

- Define a specific problem, such as the user failing to complete the process of submitting the form.

- Define a specific goal, such as increasing user form submittal.

- Define the tests. Design A may be one location for the submit button, while design B will be a different location.

- Define the duration of each test with specific forms of measurement, such as a three-week test run on 500 users.
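An A/B test like this one shows each user only one design and splits traffic evenly between A and B. Tools such as Optimizely handle the split for you; the sketch below only illustrates one common approach, hashing a stable user ID so a returning user always lands on the same design. The function name `assign_variant` and the experiment key are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "submit-button-location") -> str:
    """Deterministically assign a user to design "A" or "B".

    Hashing the (hypothetical) experiment key together with the user ID means a
    returning user always sees the same design, while the population splits
    roughly 50/50.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes as an unsigned integer and keep its parity
    bucket = int.from_bytes(digest[:8], "big") % 2
    return "A" if bucket == 0 else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(1000):
        counts[assign_variant(f"user-{i}")] += 1
    # Close to, but not exactly, 500 users per design
    print(counts)
```

Hashing rather than random assignment at each visit keeps the experience consistent for a user across sessions, which matters when the test runs for several weeks.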

Your test conclusion statement may look something like this:

Over the course of three weeks, the two designs were tested with an average of 500 user interactions, and users were divided equally between the designs. The test conclusion is that the orange button centered under the form has a higher submission rate, 76 percent, compared with 46 percent for the centered blue button. Design B, with the orange centered button, is the winner.
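Before declaring a winner, it helps to check that the gap between the two submission rates is larger than chance would explain. A minimal sketch, assuming a standard two-sided two-proportion z-test and a hypothetical split of 250 users per design (the conclusion gives only an average of 500 interactions, so these counts are illustrative, not results from the test):

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates.

    Assumes each user is counted once and users are independent.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both designs convert equally
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a, p_b, z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 115 of 250 submissions for A (blue), 190 of 250 for B (orange)
    p_a, p_b, z, p = two_proportion_z_test(115, 250, 190, 250)
    print(f"A={p_a:.0%} B={p_b:.0%} z={z:.2f} p={p:.2g}")
```

A p-value well below a threshold chosen before the test starts (0.05 is a common convention) supports declaring B the winner; with much smaller groups, the same percentages might not.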

A/B testing is efficient and clear-cut, providing measurement data that points to specific design winners. It is important to understand that A/B testing is a process, and it takes time to see results. Multivariate tests can be run in a similar format. The main difference is that several design elements are varied at once, and their combinations are tested against each other to declare a winner. Because traffic is split across more combinations, a multivariate test needs more users to reach the same confidence as an A/B test and makes it harder to attribute a result to a single change, but it can nevertheless prove useful in evaluating designs.
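Counting the combinations shows why multivariate tests need more traffic. In a full-factorial multivariate test every variant of every element is combined, so the number of designs multiplies. The elements and variants below are hypothetical:

```python
from itertools import product

# Hypothetical page elements, each with two variants
ELEMENTS = {
    "button_color": ["blue", "orange"],
    "button_position": ["left", "center"],
    "headline": ["short", "long"],
}


def build_combinations(elements: dict) -> list:
    """Return every full-factorial combination of element variants."""
    names = list(elements)
    return [dict(zip(names, values)) for values in product(*elements.values())]


if __name__ == "__main__":
    combos = build_combinations(ELEMENTS)
    total_users = 500
    print(len(combos))                 # 8 designs (2 x 2 x 2)
    print(total_users // len(combos))  # 62 users per design, versus 250 in an A/B test
```

With the same 500 users, each design in this sketch gets roughly a quarter of the traffic it would get in a two-way A/B split.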

Design prototype testing

Prototype testing can be used to test a complete user workflow in a wireframe or a fully designed portion of a product before it goes into development. This is often referred to as early-stage testing. A UX/UI designer creates the prototype and designs the workflows. Prototype testing helps fix usability issues before development engineering begins. Some guidelines to follow when starting a prototype test:

- Define the budget and goals for the test.

- Use early-stage tests to reveal the specific areas that need improvement.

- Choose a prototyping tool. Axure is one solution, but UX/UI designers may use any of several prototyping tools on the market.

- Choose a measuring tool to gather analytics from users of the prototype. The team managing the test will have to become familiar with this tool and learn how to measure results with it. Loop11 is one example of a good measuring tool.

Formative usability testing

Formative usability testing is another form of early-stage testing. It focuses more on quality assurance. The product goes through acceptance criteria testing before being released to target audiences. This test should occur before the first release of the developed product. It then becomes the baseline for comparing future tests. A foundation or building block would be another way to look at this type of testing. Formative usability testing may follow this process:

- The product may have a soft launch (v0.5) before its official release.

- Gather beta test groups to perform the defined usability tests.

- Test cases may need to be written to guide the users through specific test goals.

- Choose a third-party tool such as Optimizely to help run the test and gather analytics.

- Review the analytics and make business decisions for the product design.

- Revise designs and address usability issues before the official launch of the product.

- Run additional tests to continually improve the product over time.
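Because the first formative round becomes the baseline, each later round can be summarized with the same metrics and compared against it. A minimal sketch, assuming each participant's result is recorded as a hypothetical `(completed, seconds)` pair; the metrics chosen here (completion rate and median time on task for completed attempts) are illustrative, not prescribed by the process above:

```python
from statistics import median


def summarize_round(results):
    """Summarize one test round.

    `results` is a list of (completed, seconds) tuples, one per participant.
    Median time is computed over completed attempts only, so at least one
    participant must have completed the task.
    """
    completed_times = [seconds for done, seconds in results if done]
    return {
        "completion_rate": len(completed_times) / len(results),
        "median_time_s": median(completed_times),
    }


def compare_to_baseline(baseline: dict, current: dict) -> dict:
    """Return the change in each metric relative to the baseline round."""
    return {metric: current[metric] - baseline[metric] for metric in baseline}


if __name__ == "__main__":
    baseline = summarize_round([(True, 40), (False, 90), (True, 60), (True, 50)])
    revised = summarize_round([(True, 30), (True, 45), (True, 35), (True, 40)])
    print(compare_to_baseline(baseline, revised))
    # {'completion_rate': 0.25, 'median_time_s': -12.5}
```

A positive change in completion rate and a negative change in median time both indicate that the revised design improved on the baseline.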

Summative usability testing

Summative usability testing occurs later in development. This is generally performed on defined user groups. The goal of summative usability testing is to determine if the execution of the design actually meets the goals of the product. This testing should produce accurate statistical measurements of usability. The product should have already been through the formative testing phases, and the resulting insights should have guided decisions for the summative testing phases. Summative testing generally follows the same flow as formative usability testing, but with greater attention to detail and more user experience test results. Summative usability testing is a process that should align with every new release of your product.
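One common statistical measurement in summative testing is the task completion rate, reported with a confidence interval because summative user groups are often small. The sketch below uses the adjusted Wald (Agresti-Coull) interval; that choice is ours for illustration rather than something the process above prescribes, and the 18-of-20 result is hypothetical.

```python
import math


def completion_rate_ci(successes: int, n: int, z: float = 1.96):
    """Adjusted Wald (Agresti-Coull) confidence interval for a completion rate.

    z=1.96 gives an approximate 95 percent interval.
    """
    n_adj = n + z ** 2
    p_adj = (successes + z ** 2 / 2) / n_adj
    margin = z * math.sqrt(p_adj * (1 - p_adj) / n_adj)
    # Clamp to the valid range for a proportion
    return max(0.0, p_adj - margin), min(1.0, p_adj + margin)


if __name__ == "__main__":
    low, high = completion_rate_ci(18, 20)
    print(f"observed 90%, 95% CI {low:.0%} to {high:.0%}")
```

Reporting the interval alongside the observed 90 percent shows how much the result could move with a different group of 20 users.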

Eyetracking technology

This is a very specific type of test that places a user in front of a camera while they use the product or website and tracks the user’s eye movements and gaze. It generally requires a third-party software tool like iMotions.com, which also tracks the user’s keystrokes and mouse movements. These tests are normally performed on a defined group of 10 or more users; the data is then analyzed to decide how the design and UX performance can be improved. This process can be costly, which may be one reason this type of usability testing has been on the decline.
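Much of the analysis step comes down to asking how long users looked at each part of the screen. Tools such as iMotions export their own data formats; the sketch below instead assumes a simplified, hypothetical export of `(x, y)` gaze samples taken at a fixed rate, plus rectangular areas of interest (AOIs) defined by the team.

```python
from dataclasses import dataclass


@dataclass
class AreaOfInterest:
    """A rectangular screen region, in pixels."""
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def dwell_times_ms(samples, aois, sample_interval_ms: float) -> dict:
    """Total gaze time per AOI, assuming evenly spaced gaze samples.

    A sample inside overlapping AOIs is counted toward each of them.
    """
    totals = {aoi.name: 0.0 for aoi in aois}
    for x, y in samples:
        for aoi in aois:
            if aoi.contains(x, y):
                totals[aoi.name] += sample_interval_ms
    return totals


if __name__ == "__main__":
    aois = [
        AreaOfInterest("form", 100, 100, 500, 400),
        AreaOfInterest("submit_button", 250, 420, 350, 460),
    ]
    # Hypothetical samples at 60 Hz (about 16.7 ms apart)
    gaze = [(120, 150), (300, 200), (310, 440), (305, 445), (700, 50)]
    print(dwell_times_ms(gaze, aois, 1000 / 60))
```

Comparing dwell times across the user group can reveal, for example, whether users spend time looking for a button they cannot find.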

Questionnaires

Questionnaires are not as numerically grounded and precise as other forms of testing, but they do provide general feedback from user groups. And because they allow you to collect a large amount of information in a short amount of time, they can be a more economical solution. A small testing team with little experience can run questionnaires, and easy third-party tools like www.surveymonkey.com are available to help create and conduct the surveys. The biggest difference between questionnaires and other forms of usability testing is that questionnaires collect formulated opinions. The validity of the results can be compromised by any number of variables, such as a subjective researcher or a respondent who misinterprets a question.
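Even though questionnaire answers are opinions, tabulating them the same way each time makes rounds comparable. A minimal sketch, assuming responses on a hypothetical 1-to-5 agreement scale, exported as a plain list of integers per question (this is not any particular survey tool's export format):

```python
from collections import Counter
from statistics import mean

SCALE = range(1, 6)  # 1 = strongly disagree ... 5 = strongly agree


def summarize_likert(responses):
    """Summarize 1-5 Likert responses for one question.

    Blank or out-of-range answers are dropped; at least one valid response
    is required. Returns the mean score, the count for each scale point, and
    the share of respondents who chose 4 or 5 ("top two box").
    """
    valid = [r for r in responses if r in SCALE]
    counts = Counter(valid)
    return {
        "n": len(valid),
        "mean": mean(valid),
        "distribution": {point: counts.get(point, 0) for point in SCALE},
        "top_two_box": sum(1 for r in valid if r >= 4) / len(valid),
    }


if __name__ == "__main__":
    # Hypothetical answers to "The form was easy to submit"; None is a skipped question
    print(summarize_likert([5, 4, 4, 3, 2, 5, None, 4]))
```

Looking at the distribution as well as the mean helps, because an average of 3 can hide a group split between strong agreement and strong disagreement.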

It’s important to remember that usability testing is a valuable investment in your software product. No project meets its goals unless those goals are clearly defined and measured against. Remember too that usability testing is often not addressed early enough in product or website development. Early-stage testing can save both time and money and will help make your product a success.

Collette Stumpf is a software designer at Surge specializing in systems user experience and user interface (UX/UI) design.

—

New Tech Forum provides a venue to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to newtechforum@infoworld.com.