Many Python developers, and developers in other languages too, enjoy working in notebooks such as Jupyter. Notebooks are useful for developing code and also for sharing code, comments, and results in a single web-based interface. Jupyter and other notebooks have become common tools, particularly among machine learning and data science users, so it's no surprise that Amazon SageMaker includes a notebook offering.

Python is one of the main languages we see in use with Amazon DocumentDB (with MongoDB compatibility). In this post, we demonstrate how to use Amazon SageMaker notebooks to connect to Amazon DocumentDB for a simple, powerful, and flexible development experience. We walk through the steps using the AWS Management Console, but also include an AWS CloudFormation template to add an Amazon SageMaker notebook to your existing Amazon DocumentDB environment.

Solution overview

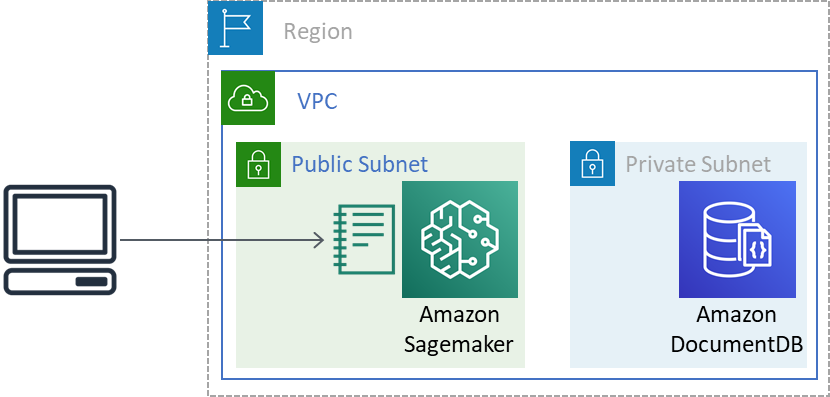

The following diagram illustrates the architecture of our solution’s end state.

In the initial state, we have an Amazon DocumentDB cluster deployed in private subnets in an Amazon Virtual Private Cloud (Amazon VPC). The VPC also contains a public subnet and a security group that has access to Amazon DocumentDB. This is a common configuration that might also include an Amazon Elastic Compute Cloud (Amazon EC2) instance or an AWS Cloud9 environment, as described in Part 1 and Part 2 of this series. For instructions on creating your environment, see Setting Up a Document Database.

From this starting point, we add an Amazon SageMaker notebook to the public subnet and connect to Amazon DocumentDB.

Creating an Amazon SageMaker notebook lifecycle configuration

To simplify setting up our notebook environment, we use a lifecycle configuration: a script that runs when the notebook instance is created or started, and that can install packages, download sample notebooks, or otherwise customize the instance environment. We use it to install pymongo, the MongoDB Python driver we use to connect to Amazon DocumentDB, and to download the CA certificate file for Amazon DocumentDB clusters, which is required for connecting over TLS.

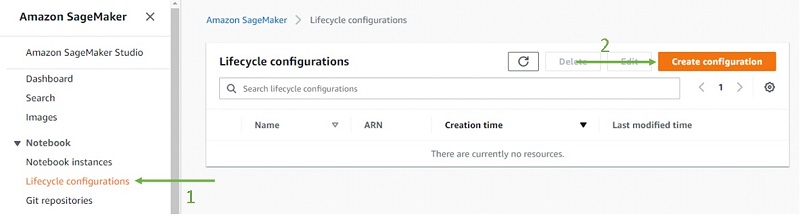

- On the Amazon SageMaker console, under Notebook, choose Lifecycle configurations.

- Choose Create configuration.

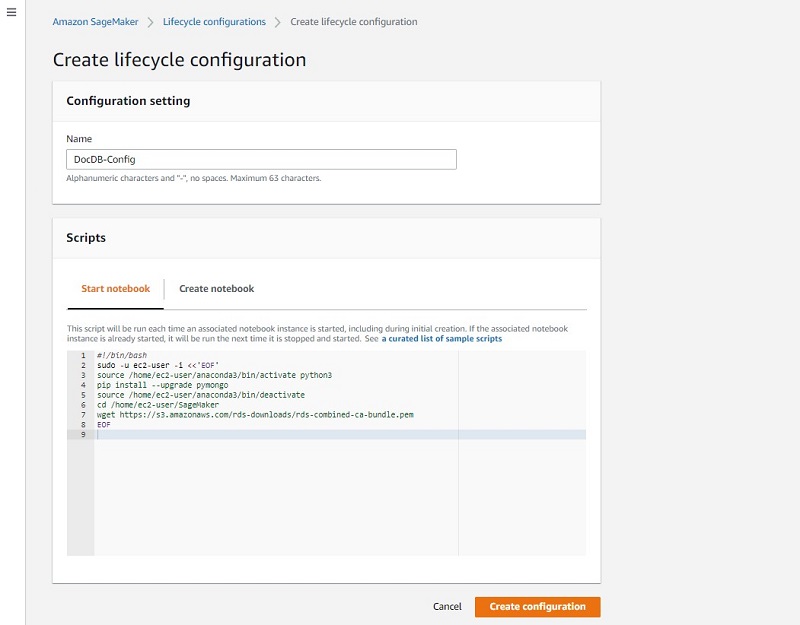

- For Name, enter a name (such as DocDB-Config).

- In the Scripts section, on the Start notebook tab, enter a script that installs pymongo and downloads the Amazon DocumentDB CA certificate file.

- Choose Create configuration.
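As a minimal sketch of the same setup done programmatically, the following Python builds an equivalent lifecycle configuration and submits it with boto3's `create_notebook_instance_lifecycle_config`, which expects each script base64-encoded under `Content`. The embedded OnStart shell script is an assumption, not the script from this post: it assumes the notebook uses the `python3` conda environment under `/home/ec2-user/anaconda3`, and that the global CA bundle URL shown is current (check it against the Amazon DocumentDB TLS documentation).

```python
import base64

# ASSUMPTION: OnStart script that installs pymongo into the "python3" conda
# environment and downloads the Amazon DocumentDB CA bundle to ec2-user's home.
# Verify the conda path and the bundle URL before using this.
ON_START_SCRIPT = """#!/bin/bash
set -e
sudo -u ec2-user -i <<'EOF'
source /home/ec2-user/anaconda3/bin/activate python3
pip install --upgrade pymongo
wget -O /home/ec2-user/global-bundle.pem https://truststore.pki.rds.amazonaws.com/global/global-bundle.pem
EOF
"""


def build_lifecycle_config_request(name: str, script: str = ON_START_SCRIPT) -> dict:
    """Build keyword arguments for SageMaker's CreateNotebookInstanceLifecycleConfig.

    The API expects each script as base64-encoded text under "Content".
    """
    encoded = base64.b64encode(script.encode("utf-8")).decode("ascii")
    return {
        "NotebookInstanceLifecycleConfigName": name,
        "OnStart": [{"Content": encoded}],
    }


def create_lifecycle_config(name: str = "DocDB-Config") -> dict:
    """Submit the lifecycle configuration (requires boto3 and AWS credentials)."""
    import boto3  # imported here so the request builder works without boto3

    client = boto3.client("sagemaker")
    return client.create_notebook_instance_lifecycle_config(
        **build_lifecycle_config_request(name)
    )
```

Using only an OnStart script (and no OnCreate script) means the installation and download run on every start of the instance, which keeps them in place across stop and start cycles.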

Creating an Amazon SageMaker notebook instance

Now we create the notebook instance itself.

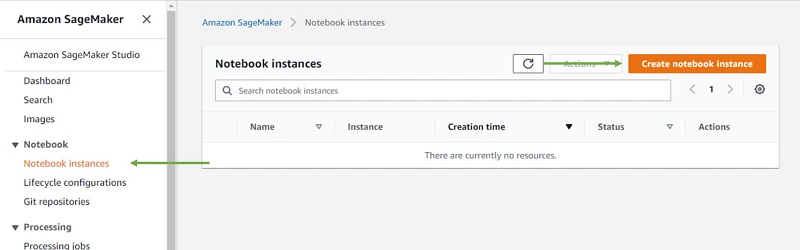

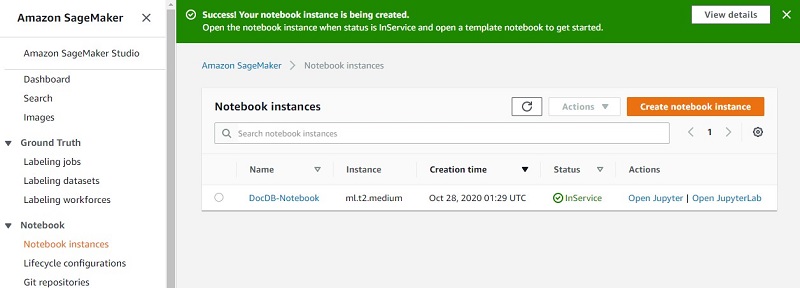

- On the Amazon SageMaker console, under Notebook, choose Notebook instances.

- Choose Create notebook instance.

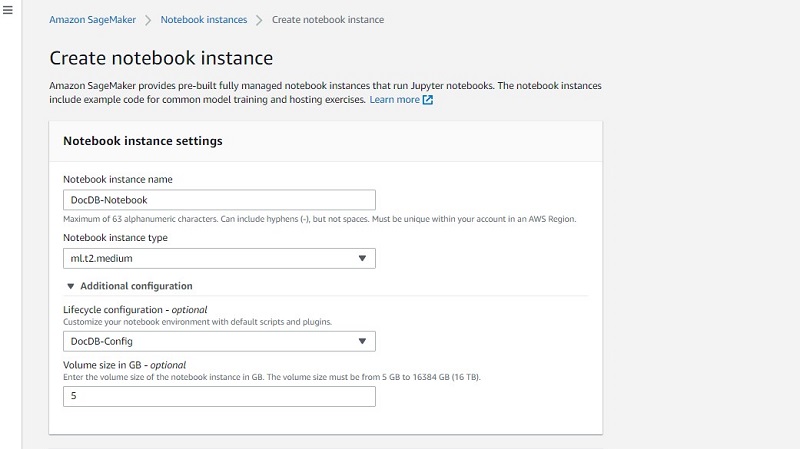

- For Notebook instance name, enter a name (such as DocDB-Notebook).

- For Notebook instance type, choose your instance type (for this post, choose ml.t2.medium).

- Under Additional configuration, for Lifecycle configuration, choose the configuration you created (DocDB-Config).

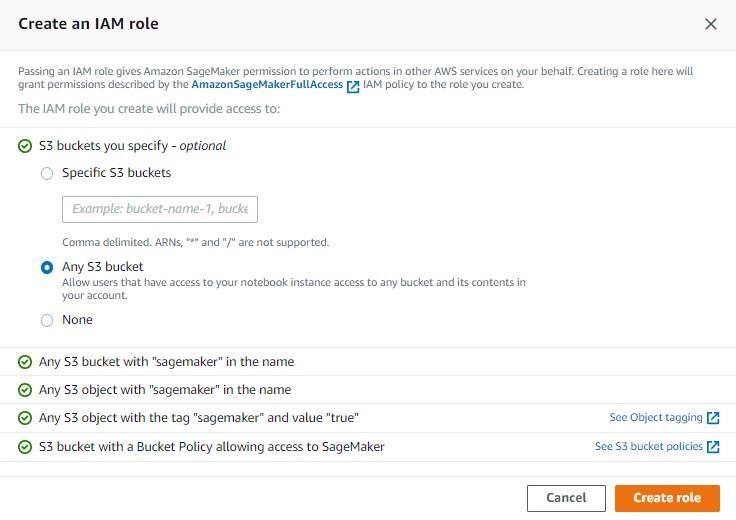

- For IAM role, choose Create a new role.

- In the Create an IAM role pop-up, select Any S3 bucket.

- Leave everything else at its default.

- Choose Create role.

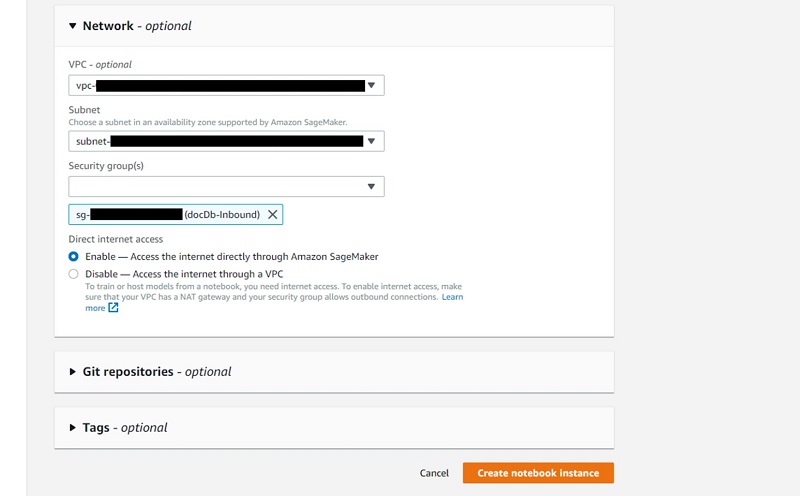

- Under Network, for VPC, choose the VPC that contains your Amazon DocumentDB cluster.

- For Subnet, choose a public subnet.

- For Security group(s), choose a security group that provides access to your Amazon DocumentDB cluster.

- Choose Create notebook instance.

It may take a few minutes to launch this instance. When it’s ready, the instance status shows as InService.

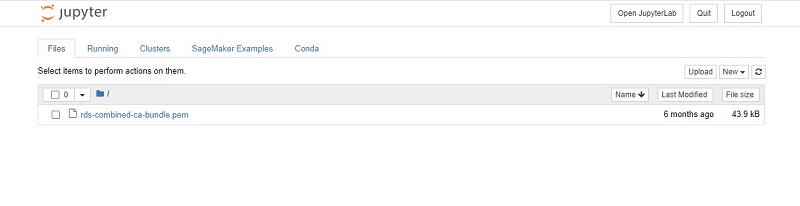

- Choose Open Jupyter, which opens a new window or tab.
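The console choices in this section map onto the SageMaker `CreateNotebookInstance` API. The following is a hedged sketch with boto3: the role ARN, subnet ID, and security group IDs are hypothetical placeholders you would replace with your own values, and the function waits with the `notebook_instance_in_service` waiter until the instance reaches InService.

```python
from typing import Sequence


def build_notebook_instance_request(
    name: str,
    role_arn: str,
    subnet_id: str,
    security_group_ids: Sequence[str],
    lifecycle_config_name: str = "DocDB-Config",
    instance_type: str = "ml.t2.medium",
) -> dict:
    """Mirror the console choices as CreateNotebookInstance keyword arguments."""
    return {
        "NotebookInstanceName": name,
        "InstanceType": instance_type,
        "RoleArn": role_arn,
        "SubnetId": subnet_id,  # a public subnet in the cluster's VPC
        "SecurityGroupIds": list(security_group_ids),  # must allow access to the cluster
        "LifecycleConfigName": lifecycle_config_name,
    }


def create_and_wait(request: dict) -> str:
    """Create the instance, block until it is InService, and return its status."""
    import boto3  # imported here so the request builder works without boto3

    client = boto3.client("sagemaker")
    name = request["NotebookInstanceName"]
    client.create_notebook_instance(**request)
    # The waiter polls DescribeNotebookInstance until the status is InService.
    client.get_waiter("notebook_instance_in_service").wait(NotebookInstanceName=name)
    return client.describe_notebook_instance(NotebookInstanceName=name)["NotebookInstanceStatus"]
```

Keeping the request construction separate from the API call makes it easy to review the exact arguments before anything is created.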

Importing the example notebook

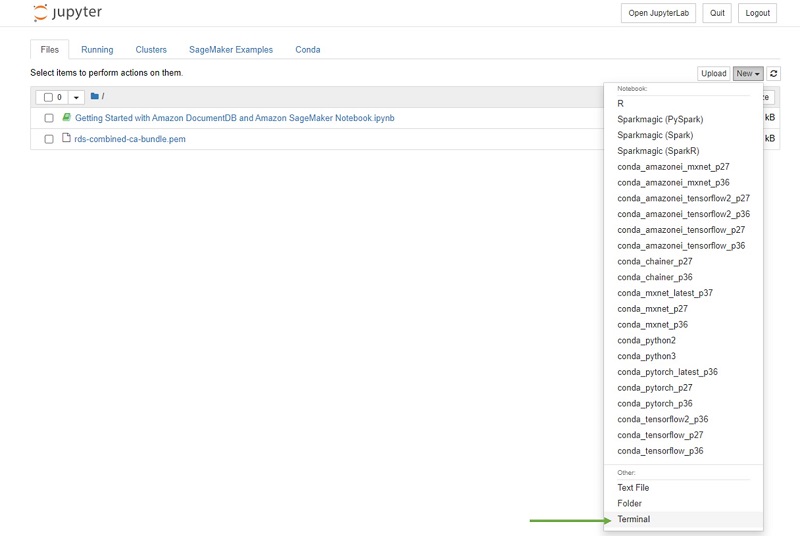

You can now create a new notebook from the New menu. Amazon SageMaker supports a variety of prebuilt environments for your notebook, ranging from basic Python 3 environments to environments with TensorFlow or Apache Spark dependencies preloaded.

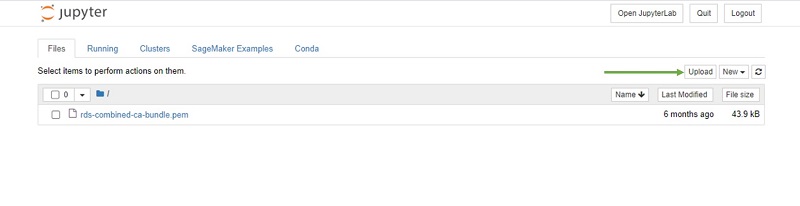

- For this post, import the example Jupyter notebook.

- Save the file locally.

- Choose Upload.

- Choose the local copy of the example notebook.

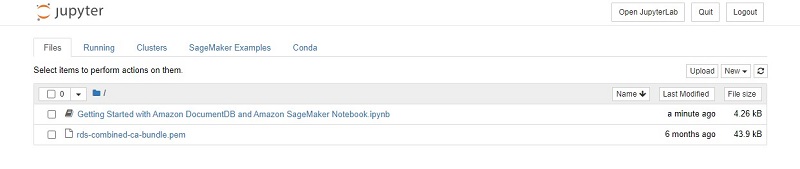

You should see the Getting Started with Amazon DocumentDB notebook listed.

- Choose Upload to upload the notebook file to Amazon SageMaker.

- Choose Getting Started with Amazon DocumentDB and Amazon SageMaker Notebook.ipynb to open the notebook.

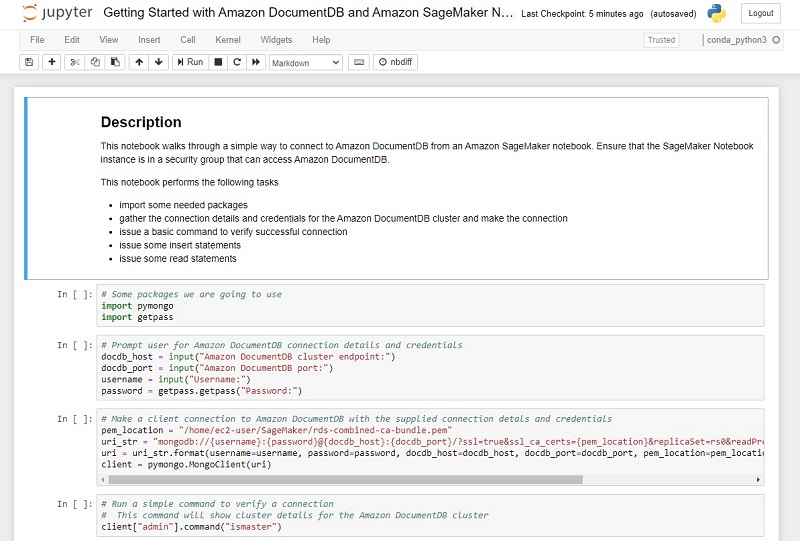

This sample notebook demonstrates a few simple operations:

- Import some needed packages

- Gather the connection details and credentials for the Amazon DocumentDB cluster and make the connection

- Issue a basic command to verify a successful connection

- Issue some insert statements

- Issue some read statements
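The connection steps in this list can be sketched in Python with pymongo. This is not the example notebook's code; it assumes the CA bundle was saved as `/home/ec2-user/global-bundle.pem` and uses the connection-string options Amazon DocumentDB documents for TLS connections (`replicaSet=rs0`, `readPreference=secondaryPreferred`, and `retryWrites=false`, because Amazon DocumentDB does not support retryable writes). The `ping` command serves as the basic verification command.

```python
import getpass
from urllib.parse import quote_plus

# ASSUMPTION: location of the CA bundle downloaded by the lifecycle configuration.
CA_FILE = "/home/ec2-user/global-bundle.pem"


def build_docdb_uri(host: str, username: str, password: str, port: int = 27017) -> str:
    """Return a connection string with TLS enabled and retryable writes disabled."""
    # quote_plus escapes characters such as '@', ':' or '/' that would break the URI.
    return (
        f"mongodb://{quote_plus(username)}:{quote_plus(password)}@{host}:{port}/"
        "?tls=true&replicaSet=rs0&readPreference=secondaryPreferred&retryWrites=false"
    )


def connect(host: str, username: str, password: str, ca_file: str = CA_FILE):
    """Connect with pymongo and run a basic command to verify the connection."""
    import pymongo  # installed on the instance by the lifecycle configuration

    client = pymongo.MongoClient(
        build_docdb_uri(host, username, password), tlsCAFile=ca_file
    )
    client.admin.command("ping")  # raises an exception if the cluster can't be reached
    return client


def prompt_and_connect():
    """Prompt for connection details in a notebook cell; getpass hides the password."""
    host = input("Cluster endpoint: ").strip()
    username = input("Username: ").strip()
    password = getpass.getpass("Password: ")
    return connect(host, username, password)
```

In a notebook cell, calling `prompt_and_connect()` asks for the cluster endpoint and credentials, so they are never stored in the notebook file itself.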

You can run the notebook cell by cell and provide the Amazon DocumentDB information when prompted.
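The insert and read steps can be sketched as follows, assuming you already have a connected pymongo `MongoClient` and pass in a collection such as `client["sample_db"]["items"]` (hypothetical database and collection names). The `name` and `qty` fields are also illustrative, not taken from the example notebook.

```python
def make_sample_docs(n: int) -> list:
    """Create n small sample documents with hypothetical fields."""
    return [{"name": f"item-{i}", "qty": i * 10} for i in range(n)]


def insert_and_read(collection, n: int = 5, min_qty: int = 20) -> list:
    """Insert sample documents, then return the names of those with qty >= min_qty.

    `collection` is expected to be a pymongo Collection. Documents are inserted
    without an explicit _id, so the driver generates one for each document.
    """
    collection.insert_many(make_sample_docs(n))
    # Range query on qty, sorted ascending by qty.
    cursor = collection.find({"qty": {"$gte": min_qty}}).sort("qty", 1)
    return [doc["name"] for doc in cursor]
```

Because `_id` values are generated on each insert, running the cell twice stores a second copy of the sample documents, and the read returns both copies.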

Terminal access

If you prefer command-line tools, or need tools beyond Python, you can also open a terminal session on an Amazon SageMaker notebook instance. On the Jupyter main page, from the New menu, choose Terminal.

This opens a new browser tab with a terminal session on the Amazon SageMaker instance that you created. From there you could, for example, install utilities like mongoimport for bulk loading data.
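As a hedged sketch of preparing a bulk load, the following Python writes documents as newline-delimited JSON (mongoimport's default input format) and builds a mongoimport argument list with the TLS options used for Amazon DocumentDB. It assumes mongoimport is already installed on the instance and that the CA bundle is at `/home/ec2-user/global-bundle.pem`; the host, database, and collection names you pass in are your own.

```python
import json
from pathlib import Path

# ASSUMPTION: location of the CA bundle downloaded by the lifecycle configuration.
CA_FILE = "/home/ec2-user/global-bundle.pem"


def write_ndjson(docs, path) -> Path:
    """Write one JSON document per line, which is mongoimport's default input format."""
    path = Path(path)
    with path.open("w", encoding="utf-8") as f:
        for doc in docs:
            f.write(json.dumps(doc) + "\n")
    return path


def mongoimport_args(host, username, db, collection, file, ca_file=CA_FILE):
    """Build a mongoimport argument list for a TLS-enabled Amazon DocumentDB cluster.

    --password is left out on purpose: mongoimport prompts for it when only
    --username is given, which keeps the password out of shell history.
    """
    return [
        "mongoimport",
        "--ssl",
        f"--sslCAFile={ca_file}",
        f"--host={host}:27017",
        f"--username={username}",
        f"--db={db}",
        f"--collection={collection}",
        f"--file={file}",
    ]
```

You could run the resulting list from Python with `subprocess.run(args, check=True)`, or join it with spaces and paste it into the terminal session.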

Cleaning up

If you used the CloudFormation template, delete the stack. This deletes the Amazon SageMaker notebook instance, the Amazon SageMaker lifecycle configuration, and the IAM role used to run the notebook instance.

If you performed these steps manually, you need to use the AWS console to remove the following components:

- Notebook instance

- Lifecycle configuration

- IAM role we created when making the notebook instance
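For the manual path, the same deletions can be scripted. This is a minimal sketch assuming the example names DocDB-Notebook and DocDB-Config and a notebook instance that is currently InService (stopping an instance that is already stopped may return an error). It takes boto3 SageMaker and IAM clients as arguments, so you create those yourself.

```python
def cleanup_plan(notebook_name: str = "DocDB-Notebook", lifecycle_name: str = "DocDB-Config") -> list:
    """Return the ordered SageMaker calls that remove the notebook and its lifecycle configuration."""
    nb = {"NotebookInstanceName": notebook_name}
    return [
        ("stop_notebook_instance", nb),  # an instance must be stopped before it can be deleted
        ("wait:notebook_instance_stopped", nb),
        ("delete_notebook_instance", nb),
        ("wait:notebook_instance_deleted", nb),
        ("delete_notebook_instance_lifecycle_config",
         {"NotebookInstanceLifecycleConfigName": lifecycle_name}),
    ]


def run_plan(sagemaker_client, plan) -> None:
    """Execute the plan against a boto3 SageMaker client, using its waiters for wait steps."""
    for action, kwargs in plan:
        if action.startswith("wait:"):
            sagemaker_client.get_waiter(action[len("wait:"):]).wait(**kwargs)
        else:
            getattr(sagemaker_client, action)(**kwargs)


def delete_role(iam_client, role_name: str) -> None:
    """Detach managed policies and delete inline policies, then delete the IAM role.

    Pagination is ignored, which is fine for a role with only a few policies.
    Detached customer managed policies are not deleted.
    """
    attached = iam_client.list_attached_role_policies(RoleName=role_name)["AttachedPolicies"]
    for policy in attached:
        iam_client.detach_role_policy(RoleName=role_name, PolicyArn=policy["PolicyArn"])
    for policy_name in iam_client.list_role_policies(RoleName=role_name)["PolicyNames"]:
        iam_client.delete_role_policy(RoleName=role_name, PolicyName=policy_name)
    iam_client.delete_role(RoleName=role_name)
```

For example, `run_plan(boto3.client("sagemaker"), cleanup_plan())` followed by `delete_role(boto3.client("iam"), role_name)`, where `role_name` is the name of the role the console created for the notebook instance. An IAM role can't be deleted while policies are still attached to it, which is why the policies are removed first.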

Summary

In this post, we demonstrated how to connect an Amazon SageMaker notebook instance to Amazon DocumentDB, bringing a flexible notebook development environment together with Amazon DocumentDB. For more information, see Ramping up on Amazon DocumentDB (with MongoDB compatibility). For recent launches and blog posts, see Amazon DocumentDB (with MongoDB compatibility) resources.

About the Author

Brian Hess is a Senior Solution Architect Specialist for Amazon DocumentDB (with MongoDB compatibility) at AWS. He has been in the data and analytics space for over 20 years and has extensive experience with relational and NoSQL databases.