MySQL highly available solutions, InnoDB Cluster (it uses InnoDB storage engine and is based on Group Replication plugin) and NDB Cluster (NDB storage engine), offer high scalability and redundant topologies.

- InnoDB Cluster can be configured with up to 9 replicas, in single primary configuration or multi-primary.

- NDB Cluster instead, while being a much different solution, offers the chance to have up to 4 data nodes replicated for a single shard of data (or node group) and up to a total number of 144 nodes.

Redundancy ensures that multiple copies of data exist and are available transparently to the client. That prevents service disruption and lower risks of data loss caused by hardware malfunctioning. But this is not still enough in case of full data center outage or natural disasters. That’s when it is sometimes needed to design geographically redundant solutions. Several options are possible.

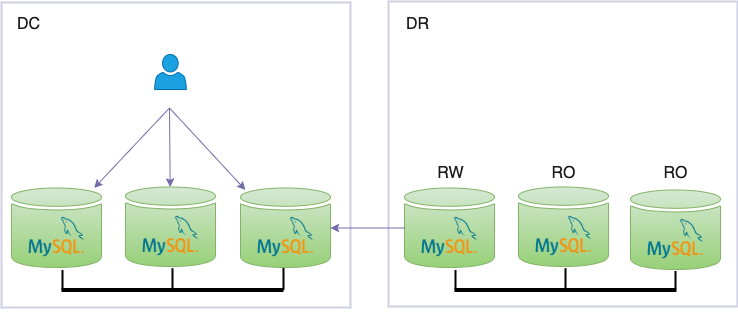

InnoDB Cluster to standalone MySQL Server

This architecture requires minimal maintenance.

- InnoDB Cluster is deployed normally in DC, clients access through MySQL Router

- Disaster Recovery (DR) site hosts a MySQL Server and a MySQL Router instance bootstrapped against the InnoDB Cluster in DC.

- MySQL Server instance in DR is a classical asynchronous replica configured with CHANGE MASTER TO, but selecting as source MySQL Router’s R/O IP and port.

This solution requires little maintenance: MySQL Router will fetch events from the cluster and deal with exclusion of unavailable instances, thus selecting a good source of data, transparently. Replica in DR will be updated at any moment (minus events not replicated because of replication lag, this is asynchronous replication, best option to replicate data across high latency sites or regions, but subject to the effects of latency).

Group Replication to Group Replication

This solution is based on Group Replication, the core of InnoDB Cluster. Two clusters are deployed in the two sites and synchronised via the traditional asynchronous replication channel.

- One DR cluster instance can be configured as replica (CHANGE MASTER TO) must it be the primary instance: only primary instances can accept writes and introduce them into the cluster (in case of DR multi-primary instance, any instance can be chosen as replica).

- This architecture needs manual maintenance if any of the instances chosen as source and replica fail. If DC source fails, DR replica must reconfigure it. If DR replica fails, new DR primary must be reconfigured against the source.

- Compared to previous DR standalone solution, clients can point to DR cluster without any expected degradation, especially for what concerns distributed reads.

- MySQL Router cannot be used with Group Replication, because MySQL Router relies on cluster metadata (which is the main difference between InnoDB Cluster and Group Replication).

- InnoDB Cluster stores local metadata schema, which is a local cluster characteristic. For this reason, to preserve local metadata from being overwritten and corrupted by the replication channel, replication between InnoDB Clusters is not possible.

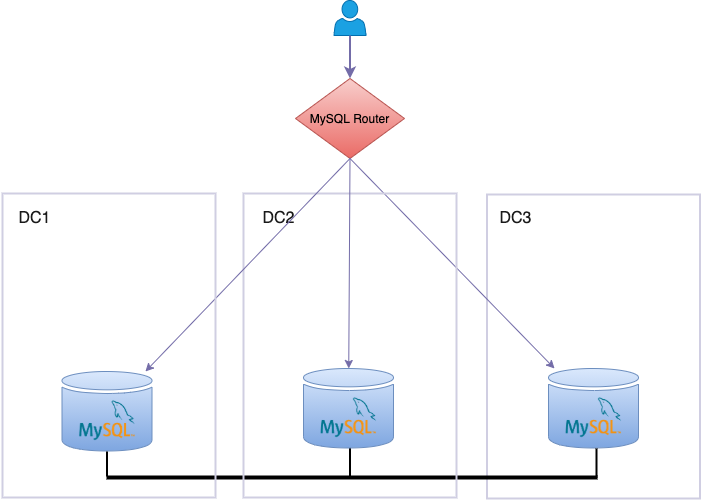

InnoDB Cluster stretched across data centers

It is also possible to prevent catastrophic failures with the standard InnoDB Cluster deploying every instance in a data center.

This approach, the simplest possible, is sensitive to latency between the data centers. Every distributed system requires low latency, preferably single digit ms latency between the data centers/ sites, and a stable connection, otherwise this will generate a poor response and a degradation of the service. Nowadays cloud providers offer acceptable latency between data centers in same site, and also good latency across data centers in the same geographic area (though latency between regions is unacceptable).

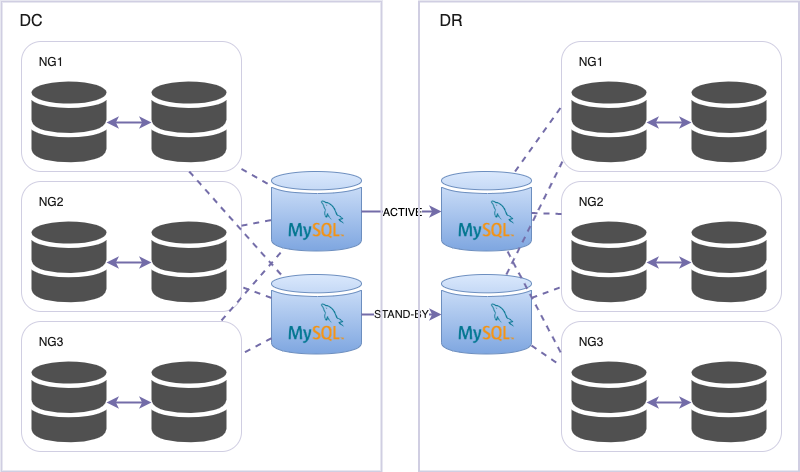

NDB Cluster to NDB Cluster

I have shown several options using InnoDB Cluster, now I will briefly introduce NDB Cluster geographical redundancy. NDB Cluster supports replicating clusters via the traditional MySQL Servers (also known as SQL nodes, said in NDB Cluster terminology).

- Replication model requires minimum one (recommended two, as in the picture) SQL node per cluster per site, forming part of the respective cluster.

- In DC, every SQL node has binary logging enabled and subscribes to changes in the NDB data nodes.

- In DR, one SQL node is configured as replica of one source SQL node in DC, hence replicates events and apply them to NDB storage engine.

- This architecture envisions a pair of replication channels, one active at time, to prevent loss of events if the binlogging SQL node fails or is disconnected from the cluster. Failover of replication channels must be executed manually.

The post Geo-Redundancy for MySQL InnoDB And NDB Clusters appeared first on mortensi.