Amazon Aurora combines the performance and availability of commercial databases with the simplicity and cost-effectiveness of open-source databases. An Aurora DB cluster consists of one or more DB instances connected to a shared and distributed Aurora storage volume.

MySQL database major version upgrades can contain database changes that may not be backward compatible with existing applications. As is common for open-source MySQL databases, you must manually perform a major version upgrade. You can perform a major version upgrade in Aurora MySQL by restoring the snapshot of your current database on to a new major version. The purpose-built storage of Aurora parallelizes snapshot restores and can support faster upgrades compared to community edition MySQL databases. However, very large snapshots may take much longer to restore, introducing considerable downtime during the upgrade process, which may not be suitable for all applications.

This post shows how you can perform a major upgrade for Aurora MySQL with minimal downtime using a blue-green deployment. This is useful for database administrators or DevOps team members responsible for the Aurora MySQL upgrades. You can use blue-green deployment for an Aurora MySQL major version upgrade for different types of Aurora MySQL clusters that support binary logs, including Aurora MySQL Global Database. You can also use this solution for any major database reorganization or changes with minimal impact on the primary database.

Solution architecture

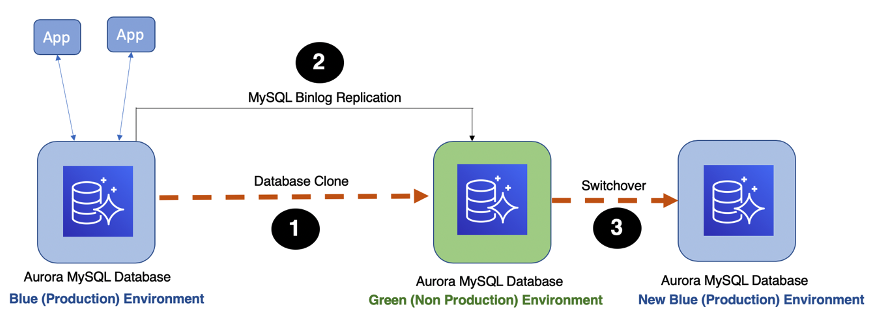

In a blue-green deployment, the blue environment represents the current database version serving production traffic. In parallel, the green environment is staged running a different version, with schema changes like new or modified indexes on a very large table. The desired change is applied on the green database environment only.

Blue-green deployment allows you to make these database changes on the green environment without impacting the blue environment, and test with the current production data set and rollback capabilities. The blue environment database changes made by the production applications in the blue environment are continually replicated to the green environment using the MySQL binary log replication. After the green environment is ready and tested, the production traffic is redirected from the blue to green environment. The MySQL binary log replication is the key process to replicate data between these two environments and allows you to perform database environment switchover with minimum downtime.

For generic database change management processes such as minor version upgrades, application changes, and data reorganization, you can use blue-green deployment with the fast database cloning or snapshot feature, but fast database cloning is faster to create a database copy quickly, easily, and cost-effectively. The steps are as follows:

- Apply the changes on the green environment and test the application.

- During downtime, stop the application and make sure all the changes are applied on the green environment.

- During the database switchover process, redirect the production traffic from the blue to green environment.

The following diagram illustrates this architecture.

For more information about cloning databases, see Creating an Aurora clone through the AWS Management Console.

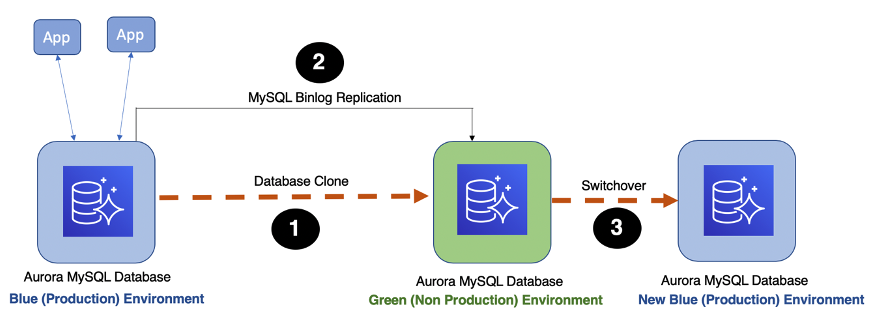

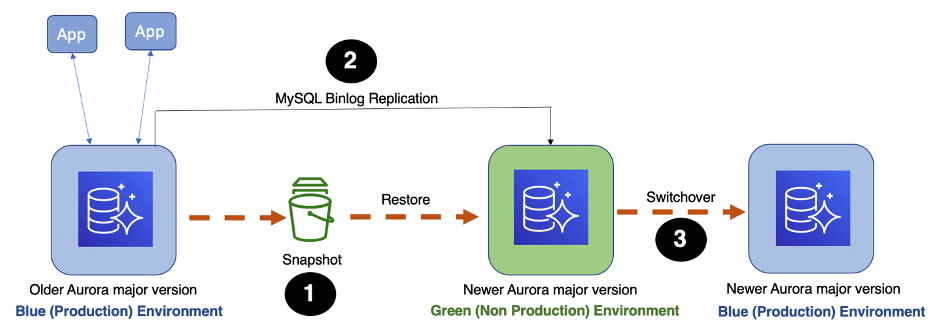

However, for the Aurora major version upgrade procedure, you need to use a snapshot. The following diagram illustrates this updated architecture.

The updated architecture includes the following steps:

- Take a snapshot from the older Aurora major version (blue environment) and restore it as a newer Aurora major version (green environment). For generic database change-management processes, use the database clone process to create a green environment.

- Set up manual MySQL binary log replication between the Aurora clusters as shown in the architecture to replicate data changes from blue to green environments.

- During the planned downtime window when the green environment is ready for switchover, stop the application blue environment and start using the green environment as new the blue environment.

Prerequisites

Before you get started, enable binary logging on the blue environment. This step is applicable only if the current blue environment is running with binary logging disabled. You need to modify the existing custom DB cluster parameter group or create a new custom DB cluster parameter group and assign it to the blue environment. Associate the newly created DB cluster parameter group to the Aurora DB cluster by modifying the Aurora DB cluster.

The use of a MySQL binary log may cause performance degradation for the database write operations, and more storage and I/O load due to the additional binlog writes and synchronization. It can also impact the database recovery process due to the recovery of the binlog file for all uncommitted transactions during the database crash recovery. We strongly recommend testing the application in a non-production environment using the MySQL binary log with a production representative workload before turning the binary log on in the production environment.

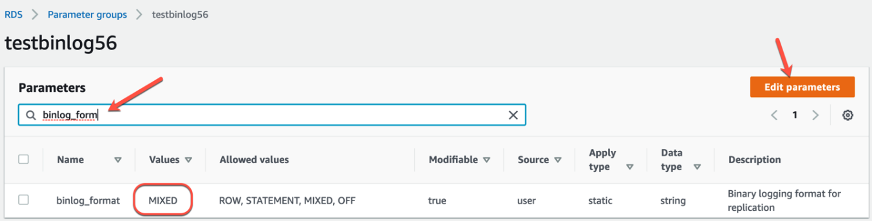

If you’re currently running the database in binary log mode, then no change to the binlog related parameter is required. If a change is required, complete the following steps:

- Open the custom cluster-level parameter group and modify the

binlog_formatparameter as MIXED (you can also use other formats like ROW or STATEMENT, but it’s recommended to set it as MIXED).

For more information, see Setting the Binary Log Format and Advantages and Disadvantages of Statement-Based and Row-Based Replication on the MySQL website.

- Restart the Aurora writer DB instance to implement the

binlog_formatparameter-related change. - After the Aurora writer DB instance reboots, validate the binlog file configuration. It should show the binlog file name and position as shown in the following code:

Next, you need to change the binary log files’ retention period to 24 hours or longer — Amazon Aurora MySQL normally purges a binary log as soon as possible. Change the binlog retention period to long enough so you can perform the snapshot restore and upgrade process. It’s best not to keep binary logs longer than necessary, so you should adjust the retention period according to your use case. To set the binary log retention time frame in hours, use mysql.rds_show_configuration and mysql.rds_set_configuration.

- Validate the existing configuration:

- Change the current binary log files’ retention period:

- Validate the binary log retention after the change:

You’re now ready to upgrade using blue-green deployment

Taking a manual snapshot and restoring it as the newer version

You first take a manual snapshot from the older Aurora MySQL major version cluster and restore the snapshot as the newer Aurora MySQL major version cluster.

- Take a manual snapshot of the current production Aurora MySQL DB cluster (blue DB cluster).

- Create parameter groups that are compatible with the Aurora MySQL newer major version and have the same functional parameters used in the Aurora MySQL older major version (to the extent that they apply to the Aurora MySQL newer major version).

- Restore the blue environment-based manual snapshot in the green environment with the latest or desired Aurora MySQL newer major version.

Setting up binary log replication

You now set up MySQL binary log replication manually between the blue and green environment clusters.

You first capture the binary log position from the AWS Management Console from the green environment cluster.

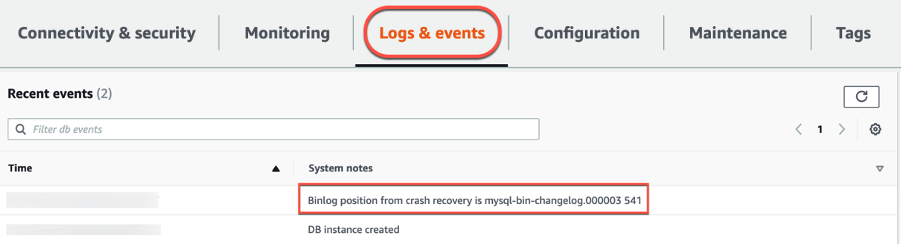

- After the snapshot is restored as a green environment cluster, record the MySQL binary log file name and position on the green environment cluster, which you use for manual replication setup between the blue and the green clusters.

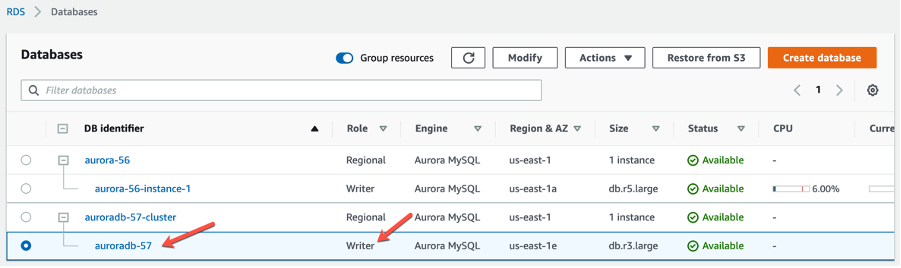

The following screenshot shows the cluster on the Databases page.

The following screenshot shows the binlog file name and position on the Logs & events tab.

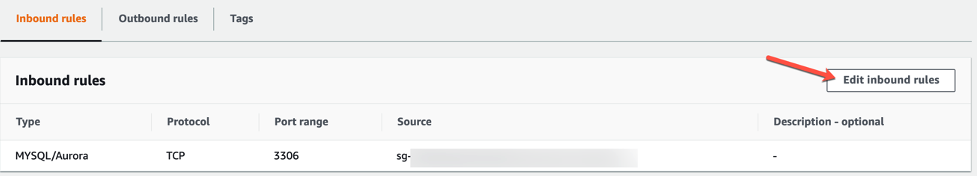

You can now modify the existing security group, which is associated with the blue environment cluster, to allow database access for the green environment cluster replication process, if needed.

- On the Inbound rules tab, choose Edit inbound rules.

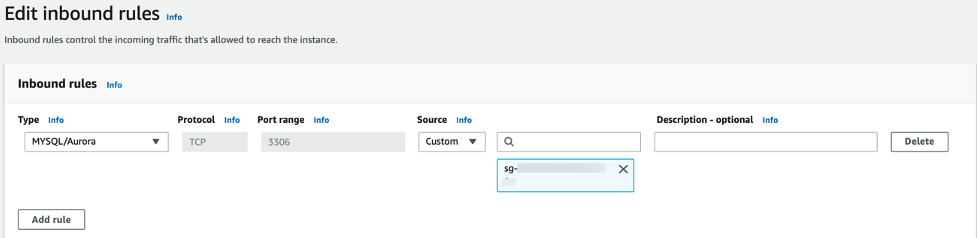

- Add a rule by adding the inbound rule type MySQL/Aurora and ingress permission for the security group ID of the green environment cluster node as source.

- Save the changes and choose to apply them immediately.

- Create a replication database user and set up manual MySQL replication between the blue and the green environment clusters. See the following code:

For security reasons, use this user only for replication.

- Grant

REPLICATION SLAVEprivileges to your replication user:

- Connect to the green environment cluster endpoint and run a SQL command to configure manual MySQL replication by using mysql.rds_set_external_master. Use the MySQL binlog file name and position info from the log file as mentioned previously:

- Start the MySQL replication process on the green environment cluster by using mysql.rds_start_replication:

- Validate the MySQL replication status by using show slave status, and make sure the replication process is up and running without any error (especially make sure that

Slave_IO_RunningandSlave_SQL_Runningshow the valueYes). See the following code:

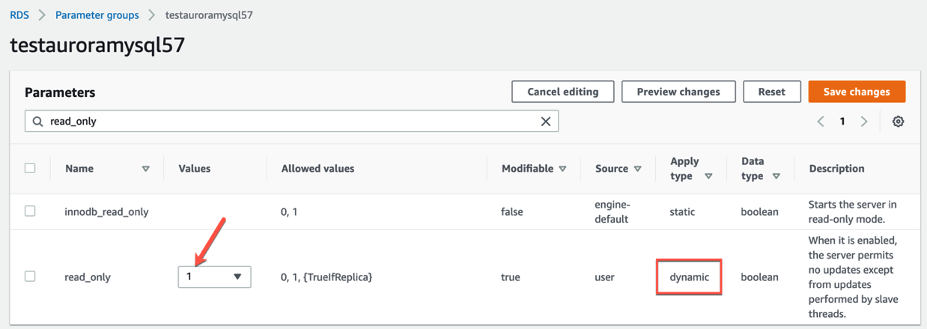

Because the green environment cluster is configured as a replica, make sure the writer DB instance is running on read-only mode to avoid any write operations.

- To set the writer instance to read-only mode, modify the custom parameter group that is assigned to the instance. The variable name

read_onlyis set to1. - Apply the changes immediately because it’s a dynamic apply type, which means that the change takes effect immediately and doesn’t require a reboot.

- Validate the DB instance mode after the change is applied. The

read_onlyvariable value should show asON:

Performing pre-switchover tasks

Before the production database switchover, you must perform a few additional tasks.

Confirming database infrastructure readiness

Make sure the green environment cluster is configured with the correct instance type and number of read replicas, including cross-Region replication, custom DB parameters groups, and security groups. Confirm that all other configurations are correct and consistent to avoid any performance, capacity, and accessibility issues after switching over to the green environment cluster for application use.

If you need to be able to roll the Aurora MySQL cluster back to the previous version, enable the MySQL binary logging on the green environment with a retention period of a few hours (as described earlier). This helps you set up reverse MySQL replication after the database switchover.

Testing the application

Test the application and critical DB operations using the green environment cluster. You can use the Aurora database clone feature to create a database copy and test the application and other critical DB operations.

Prewarming the database cache

If the application is very sensitive about database performance, you should prewarm the database cache (buffer pool memory) on the green environment cluster by running the top SELECT queries (before the database switchover process, if possible). You can also prewarm the green environment database read replica database cache by using its read replica for the production application, which may require some changes to application database connection management.

Switching the database over to the green environment cluster

Before the application stops and the downtime process starts, monitor replication lag and ensure that the replica can keep up without significant lag, as shown earlier using show slave statusG.

To avoid possible data inconsistency and a split-brain situation, never write to both cluster servers at the same time. Replication doesn’t automatically overwrite the changes or recover from them, and it may be difficult or impossible to recover manually.

Stopping the application process and changing the database mode

To stop the application and change the database mode, complete the following steps:

- Stop the all the application processes and make sure all the write activities are stopped on the blue environment and there is no application database connection.

If you can’t easily stop application writes, you can work around it by blocking application access to the database (for example, through security groups, if only the blue environment cluster uses it).

- To avoid any write operations, turn the

read_onlydatabase mode toONfor the blue environment by changing the database parameterread_onlyvalue from 0 to 1 on the writer database instance (as shown earlier). - Validate that the database mode

read_onlyis set toON. See the following code:

Ending the replication process

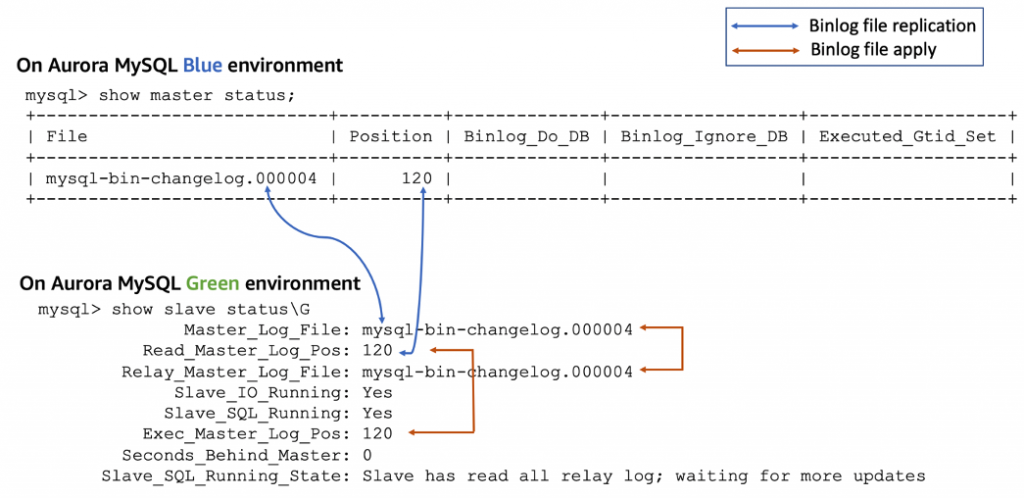

Before ending the replication process, make sure all the binlog files and events are replicated from the blue to the green environment cluster and applied on the green environment cluster. The file names and positions must match, as in the following diagram.

Also, confirm the following values:

- Slave_IO_Running – Yes

- Slave_SQL_Running – Yes

- Seconds_Behind_Master – 0

- Slave_SQL_Running_State – Has read all relay logs; waiting for more updates

For more information, see SHOW SLAVE STATUS.

If there is any replication lag, wait until the replication catches up.

You’re now ready to stop the replication process on the green environment

- End the replication with the following code:

- To remove the MySQL replication configuration information, use rds_reset_external_master:

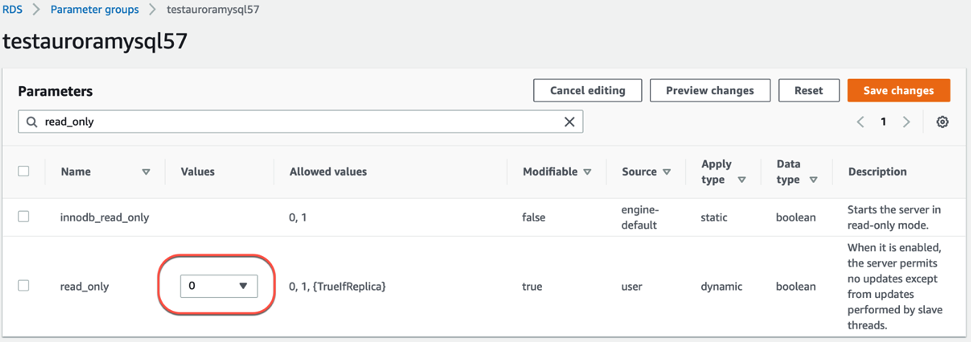

- Turn the

read_onlydatabase mode for the green environment cluster toOFFby changing the custom database parameter group on the writer database instance from 1 to 0.

- Apply the change immediately.

- Validate the database mode

read_onlyis set toOFF:

Now the Aurora green environment cluster is ready for application use.

- Run following command and record the MySQL binlog file coordinates before starting the application, which you use to set up reverse MySQL replication:

- Reconfigure all the applications to use the green environment cluster endpoints and start the application.

Performing post-upgrade tasks

After the upgrade, set up the reverse MySQL replication between the new blue (old green) and new green (old blue) database environments using the MySQL binlog file coordinates captured before starting the application and mysql.rds_set_external_master described earlier.

This helps you roll back to a previous database version, if needed. MySQL officially doesn’t support replication from a newer major version to an older major version, so the reverse replication may be prone to bugs or errors. Make sure you don’t use new database features that are available only in the new database version; this avoids MySQL replication errors and losing the ability to roll back to the previous version.

STOP all the blue environment cluster instances to minimize costs at least one full workload cycle (whether the cycle is daily, weekly, or monthly). You can then DELETE the blue environment cluster DB instances.

Summary

This post showed the detailed step-by-step procedure and best practices for an Aurora MySQL major version upgrade using blue-green deployment with a controlled database downtime period, which is usually less than a minute. The solution allows you to perform most of the tasks, including database upgrades, application testing, read replica setup, and other database infrastructure readiness tasks, before taking downtime to cut over in a more controlled way.

About the author

Rajesh Matkar is a Principal Partner Database Specialist Solutions Architect at AWS. He works with AWS Technology and Consulting partners to provide guidance and technical assistance on database projects, helping them improve the value of their solutions.

Rajesh Matkar is a Principal Partner Database Specialist Solutions Architect at AWS. He works with AWS Technology and Consulting partners to provide guidance and technical assistance on database projects, helping them improve the value of their solutions.