The attention and traction the Istio service mesh technology has generated in the past year are certainly intriguing. As I write this article, Istio is only at version 0.8. Yet it has been a sizzling hot topic at two consecutive KubeCon/CloudNativeCon events, with more than a dozen distinct sessions at the event in Denmark alone. So why is this?

Before we dig into the reasons for Istio’s popularity, let’s introduce the subject of a service mesh. Service mesh is something of a generic term, and it has been used in a number of different contexts. It has described how wireless devices communicate with one another, and it has described a system where individual applications communicate directly with other applications. More recently, the term has come to mean a network of applications or microservices, together with the relationships and interactions between them. That last meaning is the focus of this article.

Red Hat has been involved in the cloud native and microservices space for some time, particularly through the decision more than four years ago to build Red Hat OpenShift on Kubernetes and Docker. That involvement has helped us understand the importance of service mesh technologies, and of Istio in particular. This article explores four reasons why I believe Istio is popular.

Microservices and transformation

Throughout your career, or perhaps in your current role, you have likely seen the gap between writing code and deploying it to production stretch so long that developers moved on to other projects. When that happens, your feedback loop becomes unproductive or irrelevant. To shorten that lead time, some companies break larger applications into smaller pieces, such as functions or microservices. What was once a single application (read: package) with many capabilities is divided into individual packages that can be updated independently.

There is certainly value in that, but such an architecture also creates a need for more governance over the individual services and the interfaces between them. For example, interactions that were once internal API calls within a single application now have to travel across the network.

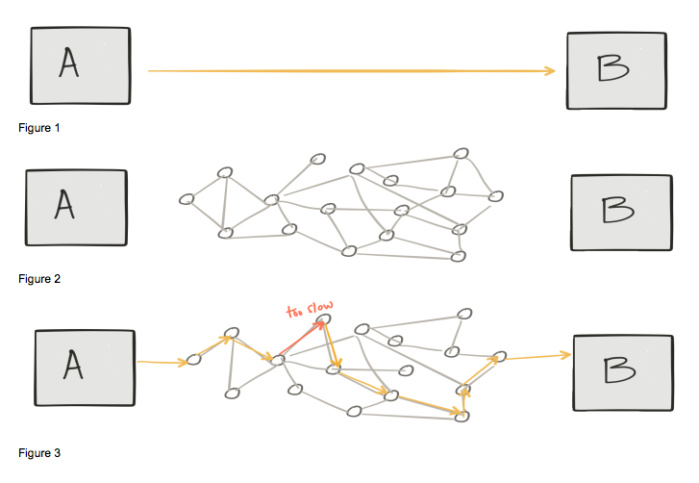

Christian Posta’s presentation “The hardest part of microservices: Calling your services” addresses an important point. When calling an API, you might be led to believe that you’re dealing with a direct A-to-B integration (figure 1). However, computer networks aren’t built to optimize for direct communication (figure 2). You will inevitably have to deal with many different physical and virtual network devices outside your control, especially if you’re considering or using cloud environments. For example, one of those devices might be performing poorly, which slows down the overall application response time (figure 3).

Image credit: Red Hat

Microservices pioneers and Netflix OSS

Some companies that seemed born for the cloud recognized early on that resilient services in the cloud required applications to protect themselves from abnormalities in the environment.

Netflix created and later open sourced a set of technologies, mostly in Java, for capabilities such as circuit breaking, edge routing, service discovery, and load balancing, among others. These features gave applications more control over their communications, which in turn increased overall availability. To test and ensure the resilience of that approach, Netflix also relies on chaos engineering. This practice injects a variety of real-world problems into a network of applications, disrupting the workflow at arbitrary points in time. The combination of technologies developed by Netflix allowed its applications to participate in an application-focused network, which is, in effect, a service mesh.
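To make circuit breaking concrete, here is a minimal sketch of the pattern in Python. It is not the API of Netflix's Java libraries. The names `CircuitBreaker`, `CircuitOpenError`, `failure_threshold`, and `reset_timeout` and the injectable clock are hypothetical choices made for illustration. After a run of consecutive failures, the breaker "opens" and fails fast instead of calling the struggling dependency. Once the timeout elapses, it lets a trial call through ("half-open").

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when the breaker is open and a call is rejected without being attempted."""


class CircuitBreaker:
    """Hypothetical minimal breaker: closed -> open after N consecutive failures,
    open -> half_open after reset_timeout seconds, half_open -> closed on success."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock  # injectable so behavior can be tested without sleeping
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half_open"
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            # Fail fast: stop sending traffic to a dependency that is already struggling.
            raise CircuitOpenError("circuit open; call rejected")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # A failed trial call while half-open, or too many consecutive failures,
            # (re)opens the circuit and restarts the reset timer.
            if self.state == "half_open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and clears the consecutive-failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Because this logic lives inside the application process, every service has to import it and wrap its own outbound calls. That is exactly the library-level coupling the following paragraphs describe.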

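At the time the Netflix OSS stack was created, virtual machines were essentially the only way to run applications in the cloud. With no pods or sidecars to host a separate component next to each application, the service mesh capabilities had to be built into the applications themselves, and Netflix chose Java as the language platform for them.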

Apart from being Java-only, the Netflix OSS stack presented another challenge. To get the service mesh capabilities, developers had to include the Java libraries in their applications and write code that used those components. At the time, companies that wanted to enforce the use of those technologies had no way to do so at the platform level.

With the arrival of Kubernetes, features such as service discovery and load balancing became part of the platform, allowing any application written in any language to take advantage of them. Through declarative and active state management, Kubernetes was also able to increase overall application availability by automatically restarting unresponsive applications. In today’s world, Kubernetes and containers are the de facto standards for running microservices applications.
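As a rough model of what platform-level service discovery and load balancing give every application, the sketch below keeps a registry that maps a service name to its ready endpoints and hands out those endpoints round-robin. It is a simplified illustration and not how Kubernetes implements Services, where components such as kube-proxy program forwarding rules on each node. `ServiceRegistry`, `register`, `set_ready`, `resolve`, and the "reviews" service are hypothetical names.

```python
import itertools
from collections import defaultdict
from typing import Dict, Iterator, List, Set


class ServiceRegistry:
    """Hypothetical toy registry: service name -> endpoints, with readiness and round-robin."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, List[str]] = defaultdict(list)
        self._unready: Set[str] = set()
        self._counters: Dict[str, Iterator[int]] = {}

    def register(self, service: str, endpoint: str) -> None:
        # Registration is idempotent: the same endpoint is listed only once.
        if endpoint not in self._endpoints[service]:
            self._endpoints[service].append(endpoint)

    def set_ready(self, endpoint: str, ready: bool) -> None:
        # Loosely mimics a failing readiness check taking an instance out of rotation.
        if ready:
            self._unready.discard(endpoint)
        else:
            self._unready.add(endpoint)

    def resolve(self, service: str) -> str:
        """Return the next ready endpoint for a service, rotating through the ready set."""
        ready = [e for e in self._endpoints.get(service, []) if e not in self._unready]
        if not ready:
            raise LookupError(f"no ready endpoints for service {service!r}")
        counter = self._counters.setdefault(service, itertools.count())
        return ready[next(counter) % len(ready)]
```

In this model, the caller only knows the service name, so the same lookup works no matter what language the calling application is written in. That is the point of moving these features into the platform.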

In Kubernetes, your applications run in “pods,” which are composed of one or more containers. One technique for running multiple containers in a pod is called a “side-car”: an additional container that runs in the same isolated space (the pod) as your main application.

Kubernetes made conditions favorable for the rise of a technology like Istio. Lyft, the ride-sharing company, was already working on an intelligent proxy called Envoy to deliver the resiliency and dynamic routing capabilities it wanted in microservices deployments. Side-car containers and Envoy allowed companies to attach a lightweight proxy to every instance of an application to support service discovery, load balancing, circuit breakers, tracing, and other features of a service mesh. Combine that with a control plane that governs the configuration and management of the Envoy instances, and you have Istio.
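The split between a data plane (one proxy beside each application instance) and a control plane (one place where operators declare policy) can be sketched in a few lines. The sketch assumes a hypothetical `ControlPlane` that stores weighted routing rules and pushes them to every registered `SidecarProxy`. It shows the key property: an operator changes traffic policy once, and every proxy picks up the change without any modification to application code. Real Istio expresses such rules as Kubernetes resources (for example, route weights in a VirtualService) and distributes them to Envoy over Envoy's xDS APIs. None of that machinery is modeled here.

```python
import random
from typing import Dict, List, Optional


class SidecarProxy:
    """Hypothetical stand-in for the proxy deployed beside one application instance."""

    def __init__(self, name: str, rng: Optional[random.Random] = None) -> None:
        self.name = name
        self.routes: Dict[str, Dict[str, int]] = {}  # service -> {version: weight}
        self.rng = rng or random.Random()

    def apply_config(self, routes: Dict[str, Dict[str, int]]) -> None:
        # The proxy does not decide policy; it applies a copy of whatever it is given.
        self.routes = {svc: dict(weights) for svc, weights in routes.items()}

    def pick_destination(self, service: str) -> str:
        weights = self.routes.get(service)
        if not weights:
            return service  # no rule configured: send traffic to the service as-is
        versions = list(weights)
        chosen = self.rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]
        return f"{service}-{chosen}"


class ControlPlane:
    """Hypothetical control plane: holds desired routes and pushes them to all proxies."""

    def __init__(self) -> None:
        self.routes: Dict[str, Dict[str, int]] = {}
        self.proxies: List[SidecarProxy] = []

    def register(self, proxy: SidecarProxy) -> None:
        self.proxies.append(proxy)
        proxy.apply_config(self.routes)  # new proxies start with the current policy

    def set_route(self, service: str, weights: Dict[str, int]) -> None:
        if sum(weights.values()) <= 0:
            raise ValueError("weights must sum to a positive number")
        self.routes[service] = dict(weights)
        for proxy in self.proxies:  # one declaration, applied everywhere
            proxy.apply_config(self.routes)
```

With weights such as `{"v1": 90, "v2": 10}`, each proxy would send roughly one request in ten to `v2`. This is the kind of gradual rollout that centrally managed routing makes easy to govern.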

Embracing the distributed architecture

In the end, Istio, and service mesh in general, are about “embracing” the fallacies of distributed computing. In other words, Istio allows applications to assume that the network is reliable, fast, secure, unchanging, and so on, as if the fallacies of distributed computing weren’t fallacies at all. It might be a stretch to say that Istio and Kubernetes solve all of them, but ignoring those fallacies might be one of the biggest mistakes an enterprise can make. Companies have to accept that when multiple microservices and functions interact with each other, they are dealing with a distributed system.
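Take the first fallacy, "the network is reliable." The sketch below shows the kind of behavior a mesh proxy can apply on an application's behalf: it retries a failed call a bounded number of times with exponential backoff. It pairs that with a crude fault injector in the spirit of the chaos engineering described earlier. `call_with_retries`, `flaky_network`, `max_attempts`, `base_delay`, and `failure_rate` are hypothetical names, and the sleep function is injectable so the example runs instantly. In Istio itself, retries and fault injection are configured declaratively rather than written into application code.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(fn: Callable[[], T], max_attempts: int = 3, base_delay: float = 0.1,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying on ConnectionError with delays of base_delay * 2**attempt."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            sleep(base_delay * (2 ** attempt))  # back off before the next attempt
    raise AssertionError("unreachable")  # the loop always returns or raises


def flaky_network(fn: Callable[[], T], failure_rate: float,
                  rng: random.Random) -> Callable[[], T]:
    """Wrap fn so that roughly failure_rate of calls fail, imitating an unreliable hop."""
    def wrapped() -> T:
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return fn()
    return wrapped
```

Retries are not free, because each one adds load to a dependency that may already be struggling. That is one reason to bound them and to pair them with circuit breaking.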

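The complete list of distributed computing fallacies is: the network is reliable; latency is zero; bandwidth is infinite; the network is secure; topology doesn’t change; there is one administrator; transport cost is zero; and the network is homogeneous.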

In my conversations with OpenShift customers, I often discover that they have implemented the same patterns developed by Netflix on their own, because they understood the need for those capabilities. I was also intrigued to find entire teams focused on those features. I often heard “We are the circuit breaking team” or “We are the service discovery team.”

Companies that invested in building their own service mesh capabilities now have an opportunity to use Kubernetes and Istio. By standardizing on these open source technologies, they gain access to features, knowledge, and use cases created by a larger community. That helps them move toward more resilient applications and faster time to market at significantly lower cost.

Diogenes Rettori is the principal product manager for Red Hat OpenShift. Prior to joining the OpenShift team, he focused on customer education for Red Hat JBoss Middleware. Diogenes has a strong engineering background, having worked for companies such as Ericsson and IBM.

—

New Tech Forum provides a venue to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to newtechforum@infoworld.com.